AI categorizes your content automatically with MediaTag

Tag your assets

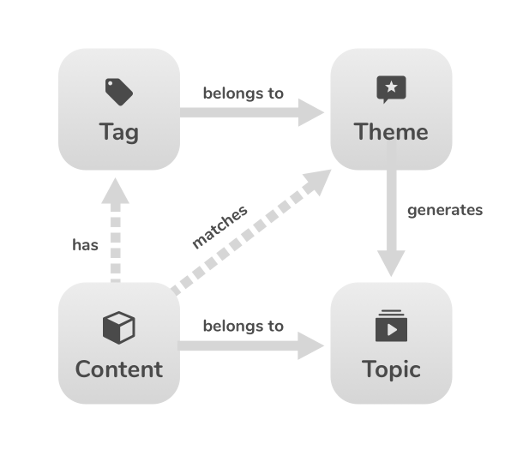

Content metadata is a treasure trove that can be used for much more than building your end-user UX. Imagine an oracle that could draw on this information to return relevant content for a list of keywords or tags. In short, this is the new power MediaTag gives you.

MediaTag automatically analyzes metadata and other textual information (e.g. subtitle files or credits obtained from media files through OCR) to label each content entry with a cloud of related tags. Autotagging is a complex process involving natural language processing, summarization algorithms, matching with ontology concepts, and more.
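To make the idea of matching text against ontology concepts more concrete, here is a deliberately simplified sketch. It is not MediaTag's actual pipeline: the `ONTOLOGY` dictionary, the `tag_cloud` function, and the sample synopsis are all hypothetical, and real autotagging would add language processing and summarization on top of plain term counting.

```python
import re
from collections import Counter

# Hypothetical mini-ontology: concept -> trigger terms (illustrative only).
ONTOLOGY = {
    "football": {"football", "striker", "goalkeeper", "league"},
    "cooking": {"recipe", "chef", "kitchen", "bake"},
    "crime": {"detective", "murder", "police", "heist"},
}

def tag_cloud(text, ontology=ONTOLOGY, min_hits=1):
    """Return {concept: hit_count} for concepts whose terms occur in text."""
    # Lowercase and count word tokens so lookups are case-insensitive.
    tokens = Counter(re.findall(r"[a-z]+", text.lower()))
    cloud = {}
    for concept, terms in ontology.items():
        hits = sum(tokens[term] for term in terms)
        if hits >= min_hits:
            cloud[concept] = hits
    return cloud

synopsis = "A retired detective joins the police to solve one last heist."
print(tag_cloud(synopsis))
```

The hit count per concept can serve as a crude weight for the tag cloud; raising `min_hits` filters out concepts that are only mentioned in passing.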

Making the most of your media catalog using MediaTag

Case Study

The customer, one of the top-five global TV networks, turned to MediaTag to help its Europe and Africa editorial teams categorize an asset library of 50K+ entries and prepare thematic distribution lists for customers across the region. MediaTag was fed with internal metadata files, low-quality videos for extraction of credits...

Features

Integration with metadata sources

- Supports ingestion of metadata from MediaStream or other external repositories in ADI format

- Can use additional textual information such as subtitle files (VTT format) or credits extracted from provided MP4 files through an internal OCR module
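As an illustration of how subtitle files can become input text for tagging, the following sketch pulls the spoken text out of a WebVTT document. It is an assumption-laden example, not MediaTag's ingestion code: the `vtt_to_text` function and the sample cues are hypothetical, and the parser only handles the common cue layout (optional identifier, timing line, payload lines).

```python
import re

def vtt_to_text(vtt):
    """Return the cue text of a WebVTT document as one plain string."""
    lines_out = []
    # Blocks (header, notes, cues) are separated by blank lines.
    for block in re.split(r"\n\s*\n", vtt.replace("\r\n", "\n").strip()):
        lines = block.split("\n")
        # Find the timing line; blocks without one (header, NOTE, STYLE) are skipped.
        timing = next((i for i, line in enumerate(lines) if "-->" in line), None)
        if timing is None:
            continue
        for payload in lines[timing + 1:]:
            # Drop inline markup such as <v Speaker> or <i>.
            clean = re.sub(r"<[^>]+>", "", payload).strip()
            if clean:
                lines_out.append(clean)
    return " ".join(lines_out)

sample = """WEBVTT

1
00:00:01.000 --> 00:00:03.000
<v Anna>Welcome back to the kitchen.

00:00:03.500 --> 00:00:05.000
Today we bake <i>bread</i>.
"""
print(vtt_to_text(sample))
```

The resulting string could then be passed to whatever text analysis step follows, alongside the metadata fields.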

Operation

- Automatic autotagging in the background

- Supports external concept taxonomies/ontologies for enriched autotagging

- Web-based editorial tool for:

  - Content/tag semantic browsing

  - Theme creation and management

  - Topic creation, management, versioning and publication

Integration

- Works seamlessly with MediaStream for automatic tag enrichment

- Standalone mode: publication through export API or generation of Excel reports